The Power of Data: Calendar-based Policy Enforcement

A problem that is often discussed in the context of policy-as-code is how to get people other than developers involved in policy authoring. Policy as code is still code, and while tooling and abstractions can help to some extent, the process still requires at least some development knowledge. Making policy authoring accessible to a larger audience is certainly a laudable goal, and tooling like the Styra DAS policy builder may help one move towards it, but it’s not the only way to get more people involved in the policy process.

Open Policy Agent (OPA) makes policy decisions based not only on policy, but just as much on data. This data might be provided by any number of systems, many of them accessible to a far larger group of people than the policy developers of an organization. Calendars are a great example. Most organizations have calendars that are shared across the organization and managed through the user interface of a calendar app such as Google Calendar or Outlook. Anyone with write access to a shared calendar can easily add new events or edit existing ones, and the changes are immediately visible to everyone else in the organization.

Calendar data isn’t just visible to calendar users, though. Using the APIs of products like Google Calendar or Microsoft Outlook, we can extract calendar data and use it in other services such as OPA. Building policies on this type of data allows anyone with access to a shared calendar to effectively control policy decisions without writing policy code.

In this blog post we are going to explore using the Google Calendar API to build policies that make decisions based on calendar events. While the examples use Google Calendar, the principles apply just as well to other calendar applications, as long as the calendar data is exposed through an API.

Example: controlling production deployments with calendar data

Do you deploy to production on Fridays? Those in the “no” camp usually point out the risk of pushing new code to production just as most people are heading out for the weekend, with little interest in working overtime to fix whatever issues the new code might introduce. Those in the “yes” camp, on the other hand, tend to stress the importance of a deployment culture where tests provide assurance that things won’t break, and the value of established rollback procedures in case things still go wrong.

Without taking a stand in the discussion, if we wanted to block production deployments on Fridays, what would such a policy look like? Perhaps something like this:

```rego
package deployments

import rego.v1

deny contains "No production deployments allowed on Fridays" if {
	time.weekday(time.now_ns()) == "Friday"
}
```

Though certainly effective for what it’s meant to do (blocking deployments on Fridays), a policy like this doesn’t leave much room for flexibility. What if we wanted to allow deployments on Fridays, but only during certain windows of time? Friday mornings, when everyone is around, should be fine, but changing the schema of the production database five minutes before the team is supposed to meet at the pub might not be the best idea. We could modify our policy to take office hours into account, but with teams increasingly distributed across time zones, what constitutes “office hours” isn’t as clear-cut as it used to be. Additionally, Fridays are unlikely to be the only days of the year when production deployments are inadvisable. Public holidays, weekends and major company events might be other occasions where we’d want to restrict deployments to production.
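To make the trade-off concrete, here is a sketch of what a “Friday mornings only” variant might look like. The time zone (Europe/Stockholm) and the 12:00 cut-off are arbitrary assumptions chosen for illustration. Both time.weekday and time.clock accept a [timestamp, time zone] pair and fall back to UTC when given a bare timestamp.

```rego
package deployments.friday

import rego.v1

# Assumption: the whole team works in one known time zone. A distributed
# team would need one such setting per person or per region.
tz := "Europe/Stockholm"

# True when ns falls on a Friday at or after 12:00 local time in tz.
friday_afternoon(ns) if {
	time.weekday([ns, tz]) == "Friday"
	[hour, _, _] := time.clock([ns, tz])
	hour >= 12
}

deny contains "No production deployments on Friday afternoons" if {
	friday_afternoon(time.now_ns())
}
```

Even this small change hard-codes a single time zone and a single cut-off, which is exactly the kind of rigidity that makes a data-driven approach attractive.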

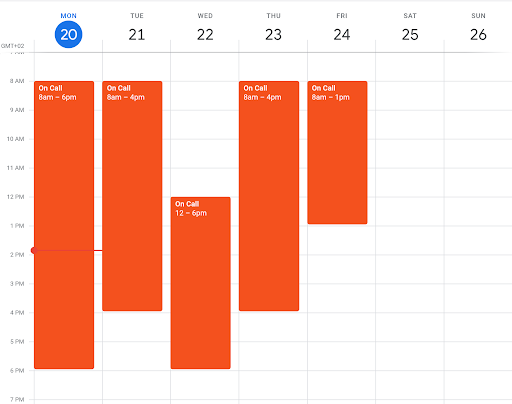

Let’s instead imagine we had a calendar dedicated to show when an organization had devops personnel on call that could deal with possible problems related to deployments. An on-call schedule like that might look something like the one below.

Using the Google Calendar API, we can extract the data from the on-call schedule and use it in our policies to determine whether deployments to production should be allowed. In addition to a Google Calendar, we’ll also need a Google Cloud account in order to create a service account, which we’ll use to query the Google Calendar API programmatically. Neither Google Calendar nor a Google Cloud service account comes with an associated cost, so if you want to follow along and test this on your own, you can do so without being billed.

Creating a Google service account

The first thing we’ll need to do is to set up a Google Cloud service account.

- From inside the Google Cloud console, search for “service account” and click the “Service Accounts” option from the drop down menu.

- Click the + CREATE SERVICE ACCOUNT button.

- In the following menu, give your service account a name, an ID and, optionally, a description. Click “Create and continue”.

- Note down the ID of your service account. You’ll need it for later.

Next, click the newly created service account and open the Keys tab. Click ADD KEY, followed by Create new key. Choose JSON as the format and download the file containing your service account credentials to your computer.

Share a Google Calendar

Next, we’ll need to share our calendar with our newly created service account.

- In Google Calendar, click the calendar you want to use and choose Settings and sharing.

- From the menu on the left, click Share with specific people, and add the service account ID (which takes the form of an email address) that you noted down earlier.

- Finally, from the menu on the left, click Integrate calendar and copy the Calendar ID value.

With all that done, we should now have a service account ID (and an associated credentials file) and a calendar ID. We are now ready to integrate against the Google Calendar API!

Scripting

To access our calendar ID, service account ID and signing key from a Rego policy without hard-coding them, let’s export them as environment variables:

```shell
export CALENDAR_ID=<Calendar ID>
export SERVICE_ACCOUNT=<Service Account ID>
export SIGNING_KEY="$(jq -r .private_key credentials.json)"
```
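A missing variable does not raise an error in the policies that follow; most likely the extracted event list simply comes back empty. A minimal sketch of an up-front check, assuming the three variable names above (the package name google.config and the file name in the comment are hypothetical):

```rego
package google.config

import rego.v1

# The variables the calendar policy reads via opa.runtime().env.
required_vars := {"CALENDAR_ID", "SERVICE_ACCOUNT", "SIGNING_KEY"}

# Names of required variables that are unset or set to an empty string.
missing(env) := {name |
	some name in required_vars
	object.get(env, name, "") == ""
}

# Evaluate with: opa eval -d config.rego data.google.config.missing_vars
missing_vars := missing(opa.runtime().env)
```

Taking the environment as a function argument keeps the check testable without touching real environment variables.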

Authenticating against Google Cloud APIs

While Google provides SDKs for most popular programming languages to simplify authentication against their APIs (and we could certainly use something like the Python SDK to fetch calendar data for later use in OPA), obtaining an access token for their REST APIs is simple enough that it can be done entirely in Rego, using its many useful built-in functions.

To obtain an access token, we must first authenticate against the Google Cloud OAuth 2.0 token endpoint. We do this by crafting a JSON Web Token (JWT) carrying the identity of the service account and the scope of the requests we intend to make, which in our case is reading Google Calendar events. The JWT is signed using the private key contained in the credentials file and, if found valid, exchanged for an access token, which can then be used to query the Google Calendar API. More detailed information can be found in the Google documentation on the topic. Since Rego provides excellent support for both creating and verifying JWTs, we can use built-ins like io.jwt.encode_sign and crypto.x509.parse_rsa_private_key (introduced in OPA v0.33.0) to create an authentication token according to Google’s requirements:

```rego
package google

import rego.v1

now := time.now_ns()

now_s := round(now / 1000000000)

auth_token := io.jwt.encode_sign({"alg": "RS256", "typ": "JWT"}, {
	"iss": opa.runtime().env.SERVICE_ACCOUNT,
	"scope": "https://www.googleapis.com/auth/calendar.events.readonly",
	"aud": "https://oauth2.googleapis.com/token",
	"exp": now_s + 3600,
	"iat": now_s,
}, crypto.x509.parse_rsa_private_key(opa.runtime().env.SIGNING_KEY))
```

The issuer of the authentication token is the service account ID, and the scope is limited to reading calendar events (and nothing more). The token expires one hour after it is issued, which is the longest lifetime Google accepts for this kind of assertion. Finally, we transform the PEM-encoded SIGNING_KEY from our credentials file into a JSON Web Key (JWK) and use that to sign the token. Obtaining an access token from Google is now as simple as sending the auth token to their OAuth 2.0 token endpoint:

```rego
access_token := http.send({
	"method": "POST",
	"url": "https://oauth2.googleapis.com/token",
	"headers": {"Content-Type": "application/x-www-form-urlencoded"},
	"raw_body": sprintf(
		"grant_type=urn:ietf:params:oauth:grant-type:jwt-bearer&assertion=%s",
		[auth_token]
	),
}).body.access_token
```

Querying the Google Calendar API

With an access token at our disposal, we are now ready to query the Google Calendar API, specifically the events endpoint. The URL for listing events consists of a base URL (https://www.googleapis.com/calendar/v3/calendars/) followed by the calendar ID and /events, optionally followed by any number of query parameters controlling things like ordering or the maximum number of events to return. To request events ordered by start time, containing only current and future events, we could do something like this. Note that timeMin filters on each event’s end time, so events already in progress are included, and that ordering by startTime requires singleEvents to be set, which expands recurring events into individual instances.

```rego
url := concat("", [
	"https://www.googleapis.com/calendar/v3/calendars/",
	opa.runtime().env.CALENDAR_ID,
	"/events?",
	urlquery.encode_object({
		"orderBy": "startTime",
		"singleEvents": "true",
		"timeMin": sprintf("%sT%s.000000Z", [
			sprintf("%d-%02d-%02d", time.date(now)),
			sprintf("%02d:%02d:%02d", time.clock(now)),
		]),
	}),
])
```

With the full URL built, we’re ready to send the request using the http.send built-in, providing the previously obtained access token in the Authorization header:

```rego
headers := {"Authorization": concat(" ", ["Bearer", access_token])}

calendar := {"events": [e |
	o := http.send({
		"method": "GET",
		"url": url,
		"headers": headers,
	}).body.items[_]
	f := object.filter(o, ["summary", "attendees", "start", "end"])
	f != {}
	e := with_timestamps(f)
]}

with_timestamps(o) := object.union(o, {
	"start": {"timestamp": time.parse_rfc3339_ns(o.start.dateTime)},
	"end": {"timestamp": time.parse_rfc3339_ns(o.end.dateTime)},
})
```

As the response comes in, we iterate over the items array and collect each event into a list. Since each event may contain a lot of information, we keep only the attributes we care about: the summary (name) of the event, its attendees, and its start and end times. Since the start and end times are RFC 3339 strings meant for readability, we finally add a nanosecond timestamp to each, which turns questions like “is this event between this and that time” into simple numeric comparisons later on.
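One gap worth knowing about: all-day events carry start.date and end.date instead of dateTime, so with_timestamps as written is undefined for them and the comprehension drops them without a trace. A sketch of a helper that handles both shapes follows, with hypothetical package and function names. Treating an all-day date as midnight UTC is a simplifying assumption, since such dates are not tied to a particular time zone. Google reports the end date of an all-day event as the day after its last day, so midnight of that date closes the span.

```rego
package google.timestamps

import rego.v1

# Timed events carry {"dateTime": "<RFC 3339 string>"}.
event_ns(t) := time.parse_rfc3339_ns(t.dateTime)

# All-day events carry {"date": "YYYY-MM-DD"} instead.
# Assumption: interpret the date as midnight UTC.
event_ns(t) := time.parse_rfc3339_ns(sprintf("%sT00:00:00Z", [t.date])) if {
	not t.dateTime
}

# Same shape as the original helper, but defined for both kinds of event.
with_timestamps(o) := object.union(o, {
	"start": {"timestamp": event_ns(o.start)},
	"end": {"timestamp": event_ns(o.end)},
})
```

The two definitions of event_ns are mutually exclusive, so at most one of them produces a value for any given input.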

Tying everything together, we can finally query the calendar rule, and store the output in a JSON file for later use in deployment policies:

```shell
opa eval -f raw -d events.rego data.google.calendar > calendar.json
```

If we look at the JSON representation of the calendar we saw earlier, it looks something like this:

```json
{
  "events": [
    {
      "end": {
        "dateTime": "2021-09-21T16:00:00+02:00",
        "timestamp": 1632232800000000000
      },
      "start": {
        "dateTime": "2021-09-21T08:00:00+02:00",
        "timestamp": 1632204000000000000
      },
      "summary": "On Call"
    },
    {
      "end": {
        "dateTime": "2021-09-22T18:00:00+02:00",
        "timestamp": 1632326400000000000
      },
      "start": {
        "dateTime": "2021-09-22T12:00:00+02:00",
        "timestamp": 1632304800000000000
      },
      "summary": "On Call"
    },
    {
      "end": {
        "dateTime": "2021-09-23T16:00:00+02:00",
        "timestamp": 1632405600000000000
      },
      "start": {
        "dateTime": "2021-09-23T08:00:00+02:00",
        "timestamp": 1632376800000000000
      },
      "summary": "On Call"
    },
    {
      "end": {
        "dateTime": "2021-09-24T13:00:00+02:00",
        "timestamp": 1632481200000000000
      },
      "start": {
        "dateTime": "2021-09-24T08:00:00+02:00",
        "timestamp": 1632463200000000000
      },
      "summary": "On Call"
    }
  ]
}
```

Nice! Along with the on-call events, we can see exactly when each of them starts and ends. This makes it simple to write a policy that allows deployments only when there are people on call.

Let’s create a policy for checking if someone is on call at any given time, including a rule that only allows deployments if someone is on call at the time of the deployment.

```rego
package deployments

import rego.v1

import data.events

default allow := false

allow if {
	is_someone_on_call(time.now_ns())
}

margin := time.parse_duration_ns("30m")

is_someone_on_call(ns) if {
	some event in events
	event.summary == "On Call"
	event.start.timestamp <= ns
	event.end.timestamp >= ns + margin
}
```

To avoid deployments going out just before the end of an on-call event, we require at least 30 minutes of the on-call shift to remain at the time of deployment. Adding some tests to make sure our function works as intended is probably a good idea!

```rego
package deployments_test

import rego.v1

import data.deployments.is_someone_on_call

test_is_someone_on_call if {
	is_someone_on_call(time.parse_rfc3339_ns("2022-01-01T02:00:00Z")) with data.events as [{
		"summary": "On Call",
		"start": {"timestamp": time.parse_rfc3339_ns("2022-01-01T01:00:00Z")},
		"end": {"timestamp": time.parse_rfc3339_ns("2022-01-01T03:00:00Z")},
	}]

	# Within duration, but not when including margin
	not is_someone_on_call(time.parse_rfc3339_ns("2022-01-01T02:00:00Z")) with data.events as [{
		"summary": "On Call",
		"start": {"timestamp": time.parse_rfc3339_ns("2022-01-01T01:00:00Z")},
		"end": {"timestamp": time.parse_rfc3339_ns("2022-01-01T02:15:00Z")},
	}]

	# Not within duration
	not is_someone_on_call(time.parse_rfc3339_ns("2022-01-01T02:01:00Z")) with data.events as [{
		"summary": "On Call",
		"start": {"timestamp": time.parse_rfc3339_ns("2022-01-01T01:00:00Z")},
		"end": {"timestamp": time.parse_rfc3339_ns("2022-01-01T02:00:00Z")},
	}]
}
```

You can find the full code used in these examples here.

Next steps

From here, we could extend our policy in a number of ways. Perhaps we could use the attendees attribute in the calendar data to check who is actually on call, and only allow deployments if their skills match the type of deployment going out: database migrations require a DBA on call, infrastructure changes require someone from operations, and so on. Or maybe we could find a use case where the location of an event controls the outcome of a policy decision? The Google Calendar API reference for the Events resource describes all the attributes an event may contain. Make sure to check it out!
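As a sketch of the attendee idea, assume a hypothetical mapping from attendee email addresses to roles and another from deployment types to required roles; neither exists in the calendar data itself and both would have to come from somewhere else, such as a directory service. The sketch relies on each entry in attendees carrying an email field, as Google Calendar attendees do, and leaves out the 30-minute margin for brevity.

```rego
package deployments.skills

import rego.v1

# Hypothetical: which roles each on-call person can cover.
roles := {
	"dana@example.com": {"dba"},
	"ola@example.com": {"operations"},
}

# Hypothetical: which role must be on call for each kind of deployment.
required_role := {"database_migration": "dba", "infrastructure": "operations"}

# True if an "On Call" event covering ns has an attendee holding role.
role_on_call(events, role, ns) if {
	some event in events
	event.summary == "On Call"
	event.start.timestamp <= ns
	event.end.timestamp >= ns
	some attendee in event.attendees
	role in roles[attendee.email]
}

default allow := false

allow if {
	role := required_role[input.deployment_type]
	role_on_call(data.events, role, time.now_ns())
}
```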

We could also imagine adding “negative” events, during which we’d rather not see new code deployed to production. Such events might include a keynote speaker doing a live demo of the flagship product, or the company hosting a party for the tech department.
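Negative events fit the same model. The sketch below assumes a hypothetical convention of marking such periods with events titled “Deployment Freeze” in the same calendar:

```rego
package deployments.freeze

import rego.v1

# Hypothetical event title used to mark periods without production deployments.
freeze_summary := "Deployment Freeze"

# True if a freeze event covers ns (end time exclusive).
freeze_active(events, ns) if {
	some event in events
	event.summary == freeze_summary
	event.start.timestamp <= ns
	ns < event.end.timestamp
}

deny contains "Production deployments are frozen right now" if {
	freeze_active(data.events, time.now_ns())
}
```

Because this is a deny rule, a caller that requires allow to be true and the deny set to be empty would block deployments during a freeze even when someone is on call.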

For a real deployment scenario, we would probably want to run our calendar extraction policy as part of a scheduled job, build a bundle containing the data along with our deployment policy, and publish it at regular intervals so that the OPA instances guarding deployments can download it.
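One consequence of publishing the data on a schedule is that OPA may end up deciding on an old copy of the calendar if the job stops running. A minimal sketch of a guard against that, assuming the extraction job is extended to also write a fetched_at timestamp (in nanoseconds) next to events. Neither that field nor the two-hour limit is part of the setup described above.

```rego
package deployments.freshness

import rego.v1

# Assumption: calendar data older than this should not drive decisions.
max_age := time.parse_duration_ns("2h")

# True if data fetched at fetched_at is older than max_age at time ns.
stale(fetched_at, ns) if ns - fetched_at > max_age

deny contains "Calendar data is stale" if {
	stale(data.fetched_at, time.now_ns())
}

# Treat a missing fetch time as a reason to refuse, too.
deny contains "Calendar data has no fetch time" if {
	not data.fetched_at
}
```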

Wrapping up

As we have hopefully established by now, policy itself constitutes only half of an informed policy decision. Providing relevant data from external sources like public APIs, databases and other services means not only that we can make more granular decisions, but also that we may engage a much larger group of people than those traditionally involved in the policy creation process.

I hope this blog post has provided some new insights, and that you’re already considering what data out there might help drive policy decisions in your organization, and what using external data might mean for opening up the policy process to new stakeholders.

Oh, and as an added bonus: the way we set up authentication against the Google Calendar API works just the same for all of their APIs, with only minor modifications, such as changing the scope. Let’s go find some policy data!
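Since only the scope differs between APIs, the claim set from the authentication example can be factored into a function that takes the scope as a parameter. Passing https://www.googleapis.com/auth/drive.readonly, for example, would request read-only access to Google Drive instead. A sketch, with hypothetical package and function names:

```rego
package google.auth

import rego.v1

# JWT claim set for Google's OAuth 2.0 token endpoint; only scope varies.
# floor (rather than round) keeps iat from landing in the future.
claims(service_account, scope, now_ns) := {
	"iss": service_account,
	"scope": scope,
	"aud": "https://oauth2.googleapis.com/token",
	"iat": now_s,
	"exp": now_s + 3600,
} if {
	now_s := floor(now_ns / 1000000000)
}

# Signs the claims with the service account's PEM-encoded private key.
auth_token(service_account, scope, pem, now_ns) := io.jwt.encode_sign(
	{"alg": "RS256", "typ": "JWT"},
	claims(service_account, scope, now_ns),
	crypto.x509.parse_rsa_private_key(pem)
)
```

Keeping the claims in a separate function also makes them testable without a real private key.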